How Builders Can Leverage Technology to Develop Smart Labs

By: Jeff Talka and Todd Boucher

The use of technology in laboratories and life science facilities has grown in both prevalence and impact over the past decade. Lab automation, for example, is expected to represent an $8.4 billion market by 2026, up from $4.8 billion in 2018. This growth is driven by the need to replace the fragmented, manual configurations of legacy lab environments, enabling more efficient management of experiments, tighter quality control, and more comprehensive data collection and analysis.

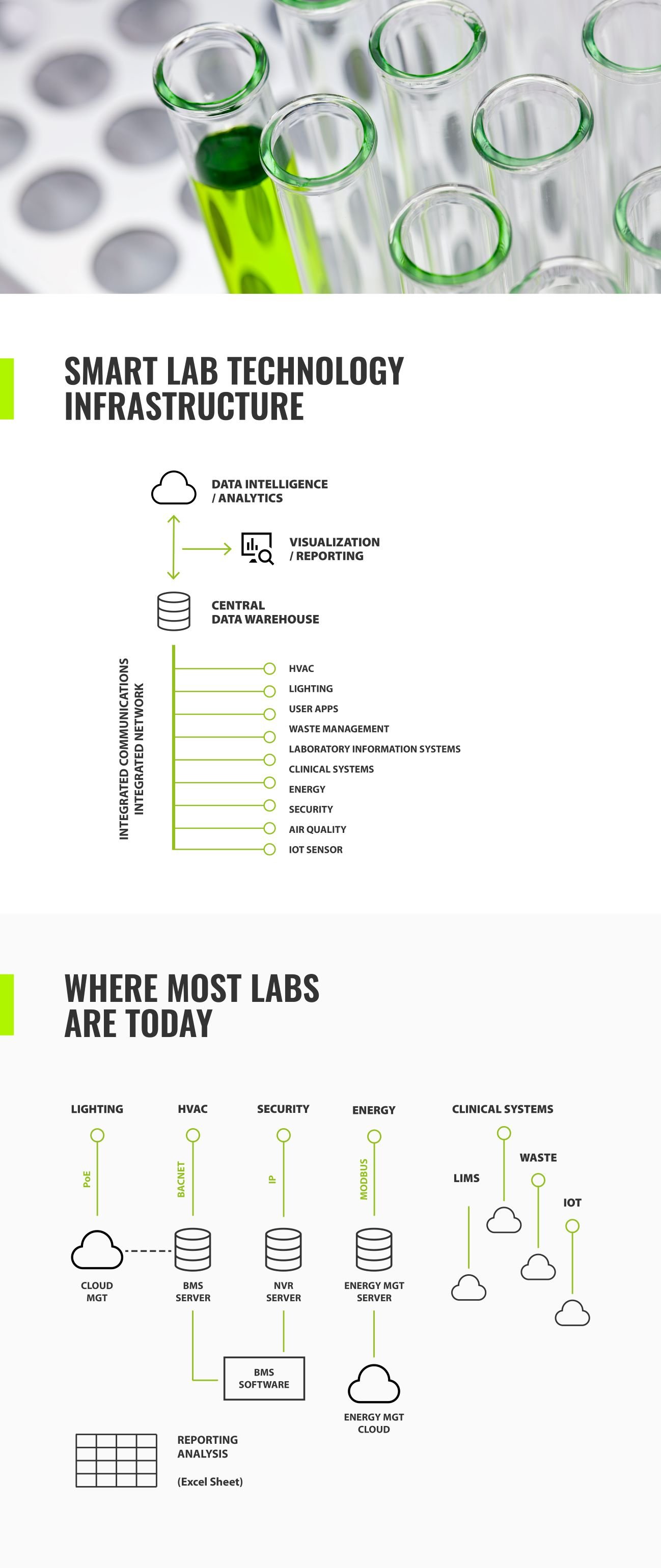

Technology-rich laboratory environments are evolving to overcome historically fragmented and manual configurations and processes; however, the building technologies supporting these mission-critical experiments lag behind in design, integration, and connectivity.

Higher levels of automation in lab equipment and associated support technologies drive a greater need for robust, reliable connectivity in life science facilities. Combine the density of lab technologies with Internet of Things (IoT) building devices that monitor indoor air quality, energy consumption, occupancy, or light levels, and the reliance on network-connected systems to deliver high-performing lab spaces has never been greater.

Complexity is rising rapidly both in laboratory experiments and in the facility systems that support them. Lab managers address this complexity by investing more in integration and in access to detailed data sources. However, the facilities and engineering teams responsible for operating life science facilities still manage siloed building systems that lack the integration and data accessibility required to support next-generation lab experiments. As a result, life science facilities miss a critical opportunity to run more efficiently, productively, and profitably.

Leveraging technology

The most prevalent technology for data capture in the laboratory setting is the laboratory information management system, or LIMS: the electronic notebook, if you will. Other technologies include building automation systems (BAS), which monitor and control the environmental parameters of labs and their supporting spaces. Add-on systems such as Aircuity help make the lab environment safe and energy-efficient, and risk-based ventilation offers another approach to environmental control. Innovation in environmental control and monitoring for lab design continues, and the industry should expect substantial improvements for years to come.

But how can we leverage available technology to improve data capture and management so that these tasks require fewer steps and produce fewer errors? In the healthcare industry, to comply with HIPAA regulations and increase productivity, initial clinical measurements such as weight and blood pressure are taken and recorded directly in a patient’s electronic health record (EHR), eliminating manual recording and input. Information is never handled a second time. The technology to accomplish the same task in the lab environment does exist; we will elaborate on this later.

Several factors drive the research environment:

How science is conducted (empirical, computer-based, high-throughput evaluation)

The social interaction of the participants (scientific culture)

The natural environment in which the science is conducted (urban, suburban, university-based)

Cultural makeup of the research team

Scientific typologies

By leveraging the information obtained on these fronts, we can project one or more scenarios that accommodate security requirements while providing a framework for unimpeded scientific discovery.

As we move toward a more computer-supported research paradigm, what are the physical ramifications of this shift? It appears that research will become increasingly computer-based, with wet chemistry supporting proof of concept. Smaller lab areas and collaboration zones could be geared toward cyber transfer and direct chemical/biological interpretation. These two paradigms have significant environmental and hygienic consequences. As research becomes more technologically driven, the environments in which it is conducted will reflect these paradigms.

Much recent discussion has centered on COVID-19 and how the workplace will respond in its aftermath. By their very nature, research laboratories have been occupied in a way that makes social distancing inherent, though this may change. We suspect that wet labs, and what we would call confirmational labs, will still require staff to be physically present to execute their work.

Information technologies will enable researchers to conduct their work in new and innovative ways. Computer-based research is more focused than the once-standard empirical method. The physical parameters of research facilities will be defined by their reliance on technology. Environmental parameters such as air exchange rates, temperature, and humidity will reflect the methods of science, and the controls for these parameters will be incorporated into the same systems that capture data from experimentation. The resulting data sets are holistic, representing both the actual results of procedures and the environments in which those results were developed.

Systems integration and data analytics

Lab automation improves the quality of data collected in the lab and increases the amount of data available to inform successful experiments. Most critically, this includes information on the conditions under which experiments succeed. This data creates an opportunity to build more effective, responsive lab facilities by combining lab data streams with data from the built environment. Yet, because of how those systems are designed and operated, few facilities can extract and analyze data from their building technologies.

Building technology design in many life science facilities is fragmented; critical systems like building management systems (BMS), access control, lighting controls, energy management, and more are designed and implemented in silos with their own software instances, monitoring platforms, and control programs. Without forethought to integrate these building technologies, data cannot flow between them, leaving facility and operations teams without access to valuable data from these mission-critical systems.

Systems integration in the built environment allows facility and operations teams in life science facilities to follow the precedent set by lab automation. By facilitating data flow between critical technologies in the facility, operations and engineering leaders can create data-driven operations that leverage trends and predictive analytics. More importantly, combining data streams from the built environment with data from laboratory equipment unlocks the potential of smart lab facilities, where computing, data analytics, and machine learning can be used to understand, predict, and maintain optimal conditions for laboratory operations and greater certainty of outcome.
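As a rough illustration of combining lab and building data streams, the Python sketch below attaches to each experiment result the most recent BMS environmental reading taken at or before it, and discards readings older than a tolerance window. The record types (`BmsReading`, `ExperimentResult`), their fields, and the 15-minute tolerance are hypothetical assumptions for illustration, not fields of any particular LIMS or BMS product; a real integration would pull these records from those systems through whatever interfaces they expose.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import datetime, timedelta


# Hypothetical record from the building management system (BMS).
@dataclass
class BmsReading:
    timestamp: datetime
    temperature_c: float
    relative_humidity_pct: float


# Hypothetical record from the lab's data system (e.g., a LIMS export).
@dataclass
class ExperimentResult:
    run_id: str
    timestamp: datetime
    yield_pct: float


def attach_conditions(results, readings, tolerance=timedelta(minutes=15)):
    """Pair each result with the latest BMS reading at or before its timestamp.

    If no reading exists before the result, or the latest one is older than
    `tolerance`, the result is paired with None instead of a stale reading.
    """
    readings = sorted(readings, key=lambda r: r.timestamp)
    times = [r.timestamp for r in readings]
    enriched = []
    for result in results:
        # Index of the last reading whose timestamp is <= the result's.
        i = bisect_right(times, result.timestamp) - 1
        match = None
        if i >= 0 and result.timestamp - times[i] <= tolerance:
            match = readings[i]
        enriched.append((result, match))
    return enriched


if __name__ == "__main__":
    base = datetime(2024, 5, 1, 9, 0)
    readings = [
        BmsReading(base, 21.0, 40.0),
        BmsReading(base + timedelta(minutes=10), 21.4, 42.0),
        BmsReading(base + timedelta(hours=1), 22.1, 45.0),
    ]
    results = [
        ExperimentResult("R1", base + timedelta(minutes=12), 81.5),
        ExperimentResult("R2", base + timedelta(minutes=40), 79.0),
    ]
    for result, reading in attach_conditions(results, readings):
        print(result.run_id, reading)
```

An "as-of" join like this is one simple way to align streams sampled at different times, and the tolerance keeps a stale reading from being silently paired with a result. Dataframe tools offer the same operation (for example, pandas `merge_asof` with its `tolerance` parameter).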

Data analytics and machine learning are critical technologies for creating the smart lab because they enable the facility to learn. By continuously analyzing lab and facility data, today’s analytics engines can recognize patterns, predict performance issues, and recommend optimal conditions. Just as lab automation eliminates human error from the recording process, analytics for the built environment eliminate the need for engineering and operations personnel to manually review energy and system performance data in an attempt to improve efficiency, proactively identify potential risks, and deliver optimal, secure conditions in lab environments.
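To make pattern recognition a little more concrete, the sketch below flags readings that deviate sharply from a trailing window of recent values, a rolling z-score check that is one of the simplest forms of anomaly detection. It is not how any particular analytics engine works; the window length, the 3-standard-deviation threshold, and the example lab differential-pressure values are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(values, window=12, threshold=3.0):
    """Return indices of readings far outside the trailing window's spread.

    A reading is flagged when it differs from the mean of the previous
    `window` readings by more than `threshold` standard deviations.
    No checks are made until the window is full, and a perfectly flat
    window (standard deviation of zero) is skipped.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(i)
        # The current reading joins the window after it has been checked.
        history.append(value)
    return flagged


if __name__ == "__main__":
    # Hypothetical lab differential-pressure readings in pascals; the lab is
    # normally held negative relative to the corridor, then briefly loses it.
    pressure_pa = [-10.0, -10.2, -9.9, -10.1, -10.0, -9.8, -10.1,
                   -10.0, -10.2, -9.9, -10.0, -10.1, -2.0, -10.0]
    print(flag_anomalies(pressure_pa))
```

A production analytics engine would typically go well beyond a check like this, but even this simple routine depends on the data being accessible from one place, which is what systems integration provides.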

For many facilities and operations leaders today, adopting data analytics for systems in the built environment may seem intimidating. Most facilities still operate in a reactive model, responding to alarms and alerts from critical systems in an ongoing attempt to keep the facility performing at optimal levels. While these organizations see value in a more predictive, proactive model, they find it difficult to visualize the path toward achieving it.

The first step toward smart labs that leverage data analytics is to integrate systems in the built environment more purposefully. Each owner’s requirements will differ in which systems are meaningful to integrate and which outcomes are desired. However, owners developing systems integration objectives should consider these foundational components:

Create a shared and open network infrastructure that all building technologies can leverage instead of each building system having its own siloed network backbone.

Establish the owner’s integration requirements and the outcomes associated with them, most notably to identify:

Which protocols and languages will facilitate communication between building systems

Create systems architecture designs that outline how building systems will communicate with each other: which protocols to leverage, which naming schemas to use, and which data must pass between systems.

Define how data will be extracted, in what format it will be communicated, where it will be stored, and how it will be accessed.

Define detailed cybersecurity requirements for each building technology.
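One way to make a naming schema and data-exchange format concrete is to encode them as something machine-checkable. The Python sketch below assumes a hypothetical dot-separated point-naming convention (`SITE.BUILDING.FLOOR.ROOM.SYSTEM.POINT`) and a hypothetical JSON record carrying a value, a unit, and a UTC ISO 8601 timestamp; neither is a standard. Established efforts such as Project Haystack and the Brick Schema address point tagging and semantics far more thoroughly, and any real schema should come out of the owner’s integration requirements.

```python
import json
import re
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

# Hypothetical naming schema: SITE.BUILDING.FLOOR.ROOM.SYSTEM.POINT,
# e.g. "BOS.B1.F03.LAB310.FH2.FACE_VEL" (fume hood 2, face velocity).
POINT_NAME = re.compile(
    r"^(?P<site>[A-Z0-9]+)\."
    r"(?P<building>[A-Z0-9]+)\."
    r"(?P<floor>F\d{2})\."
    r"(?P<room>[A-Z0-9]+)\."
    r"(?P<system>[A-Z]+\d*)\."
    r"(?P<point>[A-Z_]+)$"
)


# Hypothetical exchange record shared by every consuming system.
@dataclass
class PointRecord:
    name: str
    site: str
    building: str
    floor: str
    room: str
    system: str
    point: str
    value: float
    unit: str
    timestamp: str  # ISO 8601, normalized to UTC


def to_record(name, value, unit, when):
    """Validate a point name against the schema and build an exchange record.

    `when` should be timezone-aware; it is converted to UTC before storage.
    Raises ValueError if the name does not follow the schema.
    """
    match = POINT_NAME.match(name)
    if match is None:
        raise ValueError(f"point name {name!r} does not follow the naming schema")
    return PointRecord(
        name=name,
        value=value,
        unit=unit,
        timestamp=when.astimezone(timezone.utc).isoformat(),
        **match.groupdict(),  # site, building, floor, room, system, point
    )


if __name__ == "__main__":
    record = to_record(
        "BOS.B1.F03.LAB310.FH2.FACE_VEL",
        0.51,
        "m/s",
        datetime(2024, 5, 1, 14, 30, tzinfo=timezone.utc),
    )
    print(json.dumps(asdict(record)))
```

The design choice here is to reject non-conforming names at ingestion rather than clean them up later, so every system that consumes the data works from the same vocabulary.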

Creating a smart lab facility that leverages systems integration and data analytics produces a critical alignment. It treats data from the built environment with the same care and value as data from the laboratory, giving owners the ability to use that data to optimize conditions in their facility continually. As a result, smart labs are more responsive and better informed, driving up productivity, energy efficiency, and profitability.

Summary

The way we gain scientific knowledge has evolved; research is faster and more deliberate than ever before. Researchers today have access to everything from high-throughput operations that test molecules for efficacy to computer-based technologies capable of predicting outcomes before physical synthesis is needed. Today’s smart lab must effectively capture this fast-paced flow of information, define it for meaningful use, and align building technologies with scientific platforms in a way that improves quality, efficiency, and profitability.

We observe that numerous technology platforms support and direct scientific discovery for the common good, and that their effectiveness can be increased through better use of integrated, responsive building technologies. As research becomes ever more technology-driven, managers of lab facilities, operations, and engineering need to leverage technology in the built environment just as fully, improving both research outcomes and building performance.

Jeff Talka is science and technology director at The S/L/A/M Collaborative, and Todd Boucher is founder and principal of Leading Edge Design Group (LEDG).